
Jian-Jian Jiang (蒋艰坚)

Ph.D. student, School of Computer Science and Engineering, Sun Yat-sen University

jiangjj35@mail2.sysu.edu.cn

Biography

I am currently a student in the consecutive Master's/Ph.D. program at Sun Yat-sen University, advised by Prof. Wei-Shi Zheng, where I am cultivating my interest in research and developing my scientific ability and taste. Previously, I received my B.E. degree from Hunan University.

Research Interests

My current research focuses on learning grasping skills efficiently and reliably while ensuring that they can be deployed in the real world. I am also actively studying how to enable robots to acquire generalizable, precise, and complex manipulation skills.

Publications

Below are my publications; first-author works are highlighted. (This page includes arXiv papers; & denotes equal contribution and * denotes the corresponding author.)

AI Robotics


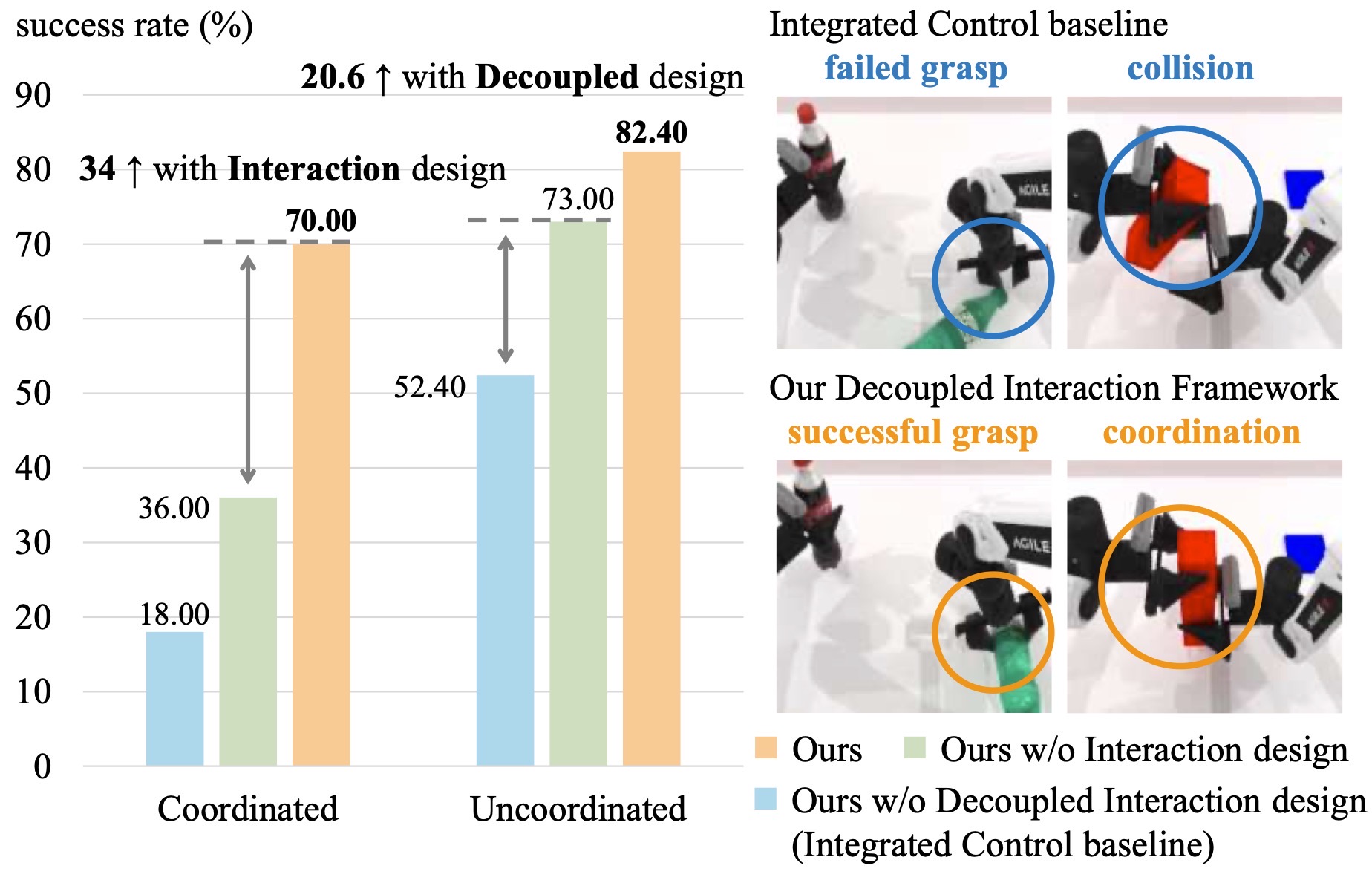

Rethinking Bimanual Robotic Manipulation: Learning with Decoupled Interaction Framework

Jian-Jian Jiang, Xiao-Ming Wu, Yi-Xiang He, Ling-An Zeng, Yi-Lin Wei, Dandan Zhang, Wei-Shi Zheng*. International Conference on Computer Vision (ICCV), 2025.

paper

We propose a decoupled interaction framework that categorizes bimanual manipulation tasks into non-collaborative and collaborative types and handles both types effectively.


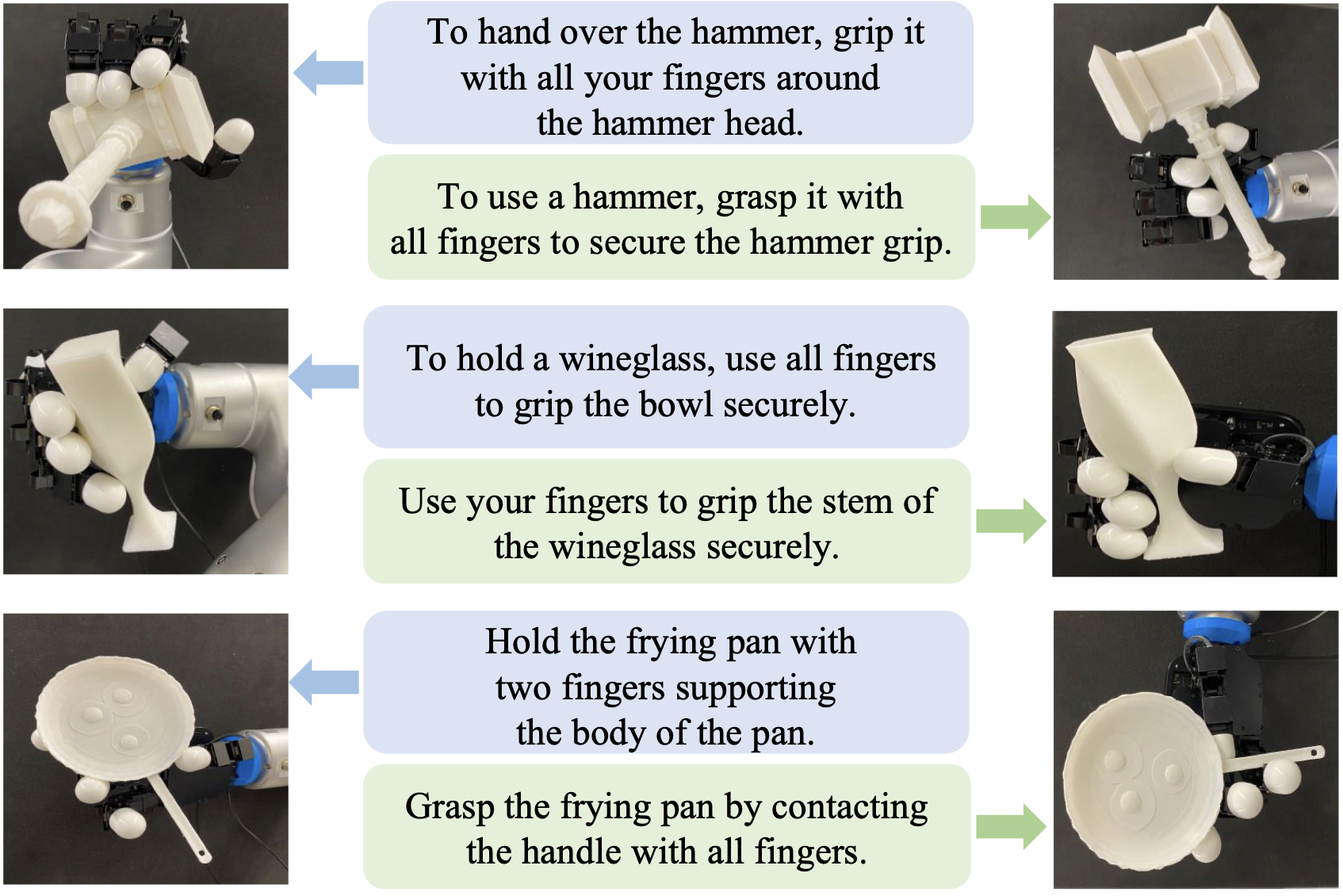

Grasp as You Say: Language-guided Dexterous Grasp Generation

Yi-Lin Wei, Jian-Jian Jiang, Chengyi Xing, Xiantuo Tan, Xiao-Ming Wu, Hao Li, Mark Cutkosky, Wei-Shi Zheng*. Neural Information Processing Systems (NeurIPS), 2024.

paper

We propose a novel task, "Dexterous Grasp as You Say" (DexGYS), together with a benchmark and a framework, enabling robots to perform dexterous grasping based on human commands expressed in natural language.


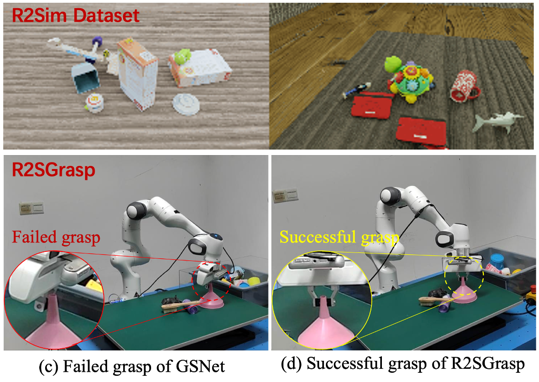

Real-to-Sim Grasp: Rethinking the Gap between Simulation and Real World in Grasp Detection

Jia-Feng Cai, Zibo Chen, Xiao-Ming Wu, Jian-Jian Jiang, Yi-Lin Wei, Wei-Shi Zheng*. Conference on Robot Learning (CoRL), 2024.

page / paper

We propose R2SGrasp, a real-to-sim framework for 6-DoF grasp detection whose key insight is to bridge the gap between simulation and the real world in a real-to-sim manner. We also build a large-scale simulated dataset to pretrain the model, which yields strong real-world performance.


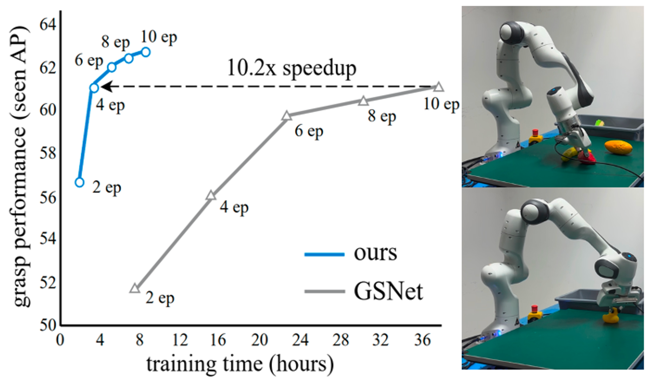

An Economic Framework for 6-DoF Grasp Detection

Xiao-Ming Wu&, Jiafeng Cai&, Jian-Jian Jiang, Dian Zheng, Yi-Lin Wei, Wei-Shi Zheng*. European Conference on Computer Vision (ECCV), 2024.

paper / code

We propose an economic grasping framework for 6-DoF grasp detection that reduces training resource costs while maintaining effective grasp performance. The framework consists of a novel label selection strategy and a focal module.


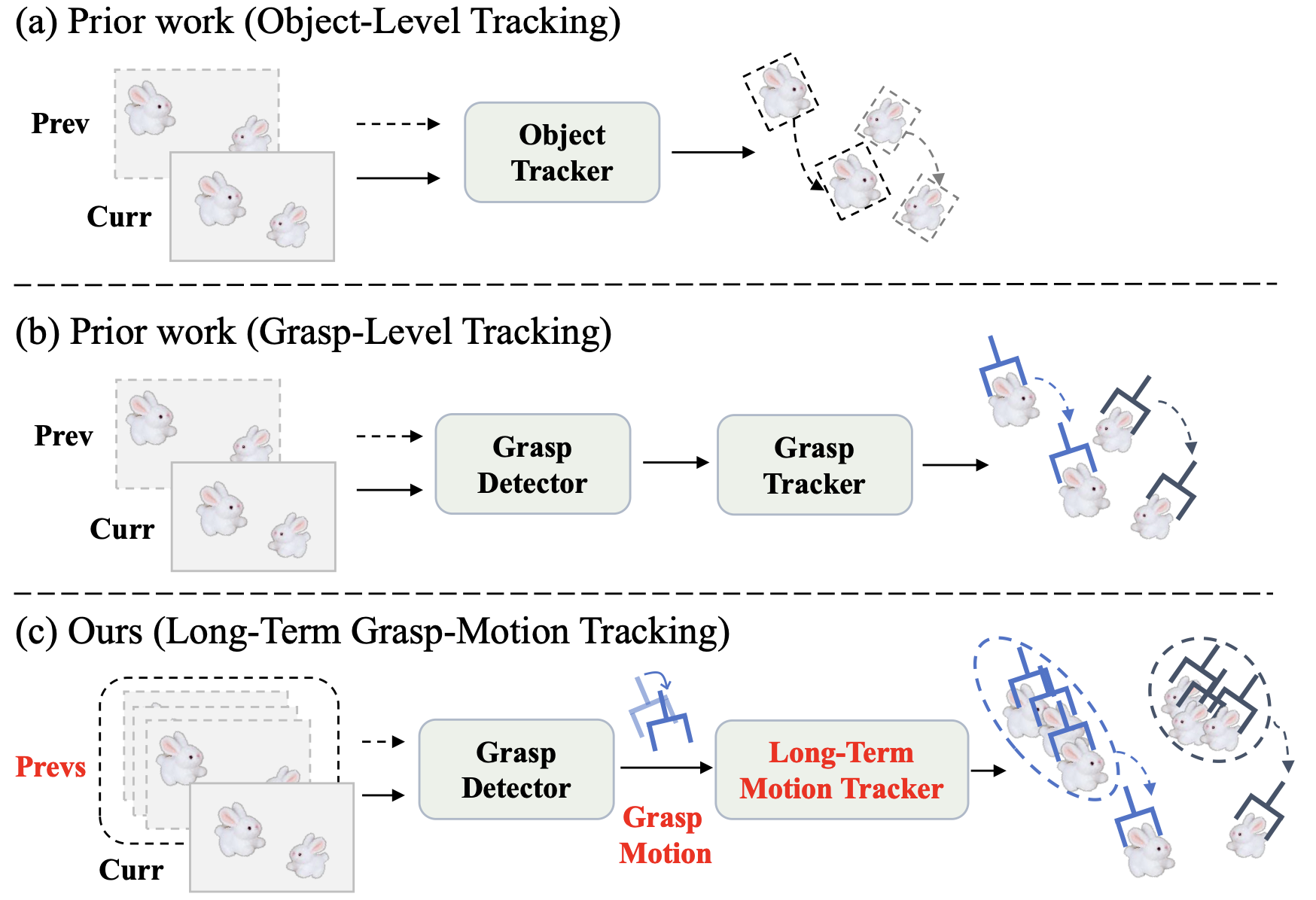

MotionGrasp: Long-Term Grasp Motion Tracking for Dynamic Grasping

Nuo Chen&, Xiao-Ming Wu&, Guo-Hao Xu, Jian-Jian Jiang, Zibo Chen, Wei-Shi Zheng*. Robotics and Automation Letters (RA-L), 2024.

paper

We design a grasp tracking framework for dynamic grasping that fully exploits long-term trajectory information by modeling it as Grasp Motion. The framework consists of a motion association module and a motion alignment module.

Academic Service

Conference Reviewer:

CoRL, 2025
